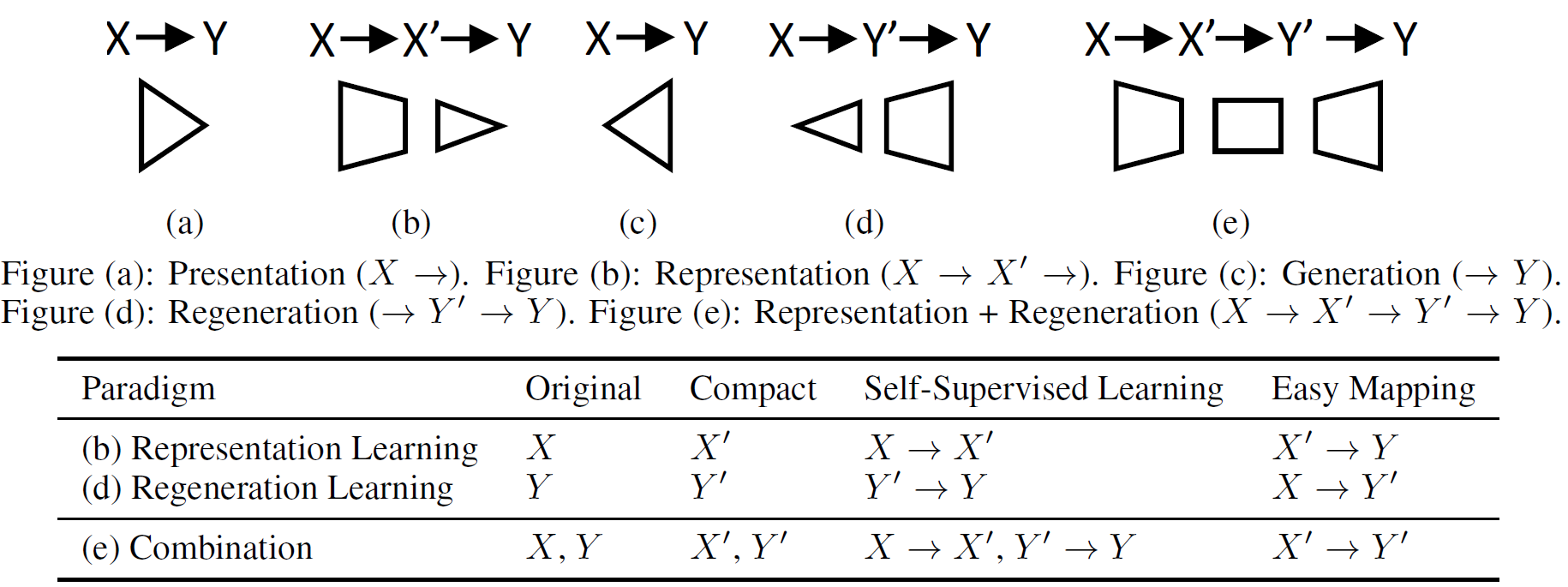

Regeneration Learning

Regeneration Learning: A Learning Paradigm for Data Generation

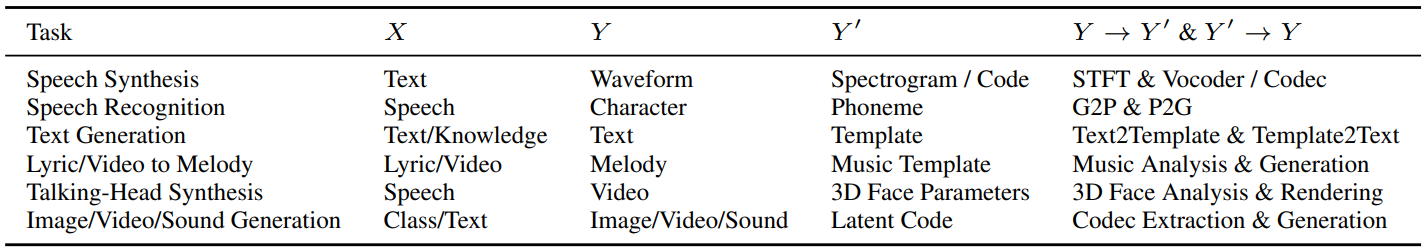

Transformer Architecture

ResiDual: Transformer with Dual Residual Connections

A Study on ReLU and Softmax in Transformer

Transformer as Memories

Transformer: A Study on ReLU and Softmax in Transformer

Speech Synthesis: NaturalSpeech (MemoryVAE)

Speech Synthesis: AdaSpeech 4 (Speaker Memory)

Text Generation: nkNN-NMT (n-gram kNN Memory)

Text Generation: Extract and Attend (Dictionary as Memory)

Talking-Face Video Synthesis: MemFace (Memories as One-to-Many Alleviators)

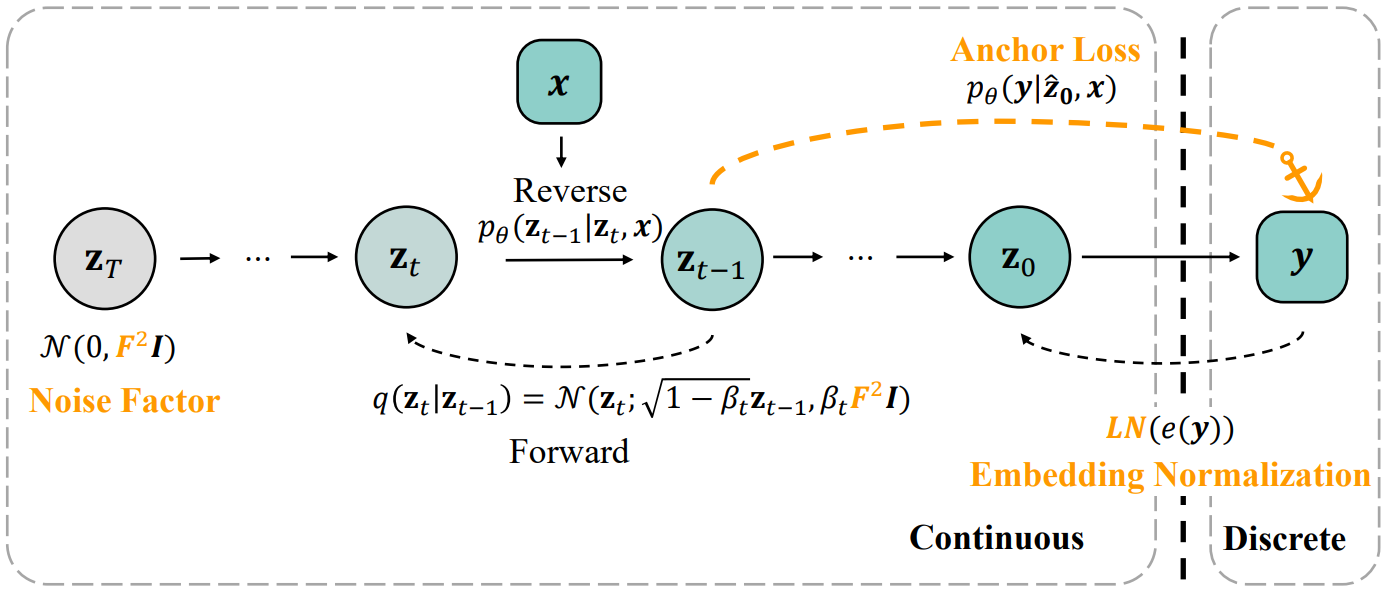

Diffusion Models

Speedup: PriorGrad, InferGrad, ResGrad

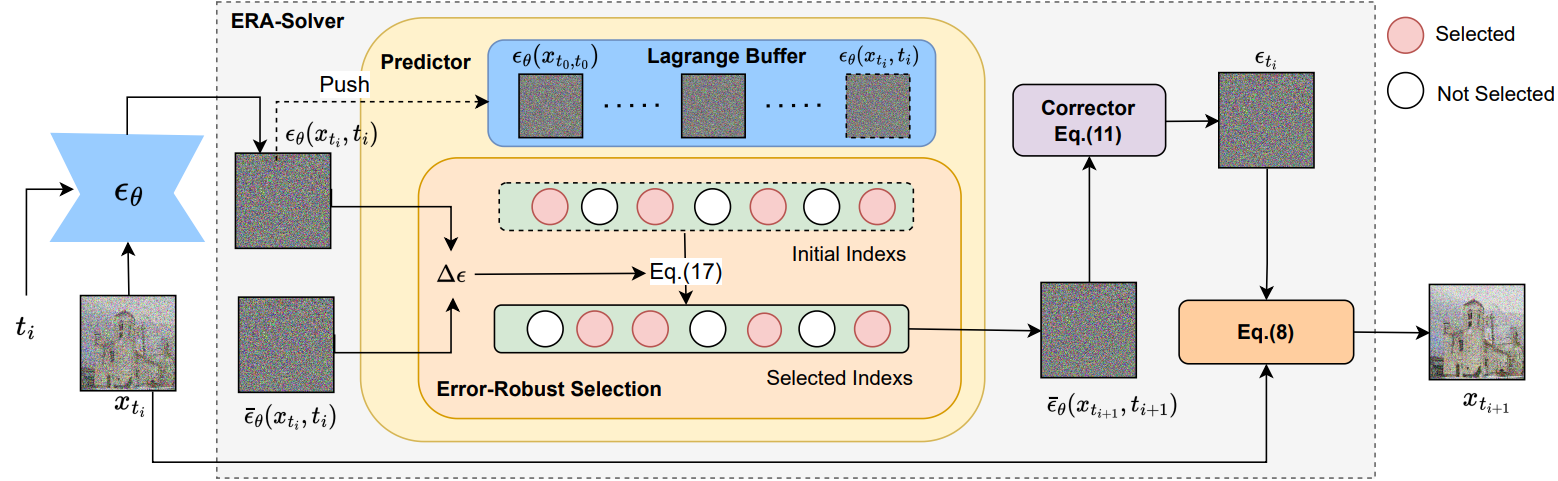

Speedup: ERA-Solver